Scientific AI at Scale: AuroraGPT

2025-09-02

🎯 AuroraGPT: Goals

AuroraGPT: General-purpose scientific LLM, broadly trained on a general corpus plus scientific {papers, texts, data}

- Explore pathways towards a “Scientific Assistant” model

- Build with international partners (RIKEN, BSC, others)

- Multilingual: English, Japanese (日本語), French, German, Spanish

- Multimodal: images, tables, equations, proofs, time series, graphs, fields, sequences, etc

Awesome-LLM

🧪 AuroraGPT: Open Science Foundation Model

🧰 AuroraGPT: Toolbox

- Datasets and data pipelines (how do we deal with scientific data?)

- Software infrastructure and workflows (scalable, robust, extensible)

- Evaluation of state-of-the-art LLM Models (how do they perform on scientific tasks?)

🚂 Training

argonne-lcf/Megatron-DeepSpeed

Large Model Training: Any Scale, Any Accelerator

🏃♂️ Running

argonne-lcf/inference-endpoints

Inference endpoints for LLMs, hosted @ ALCF

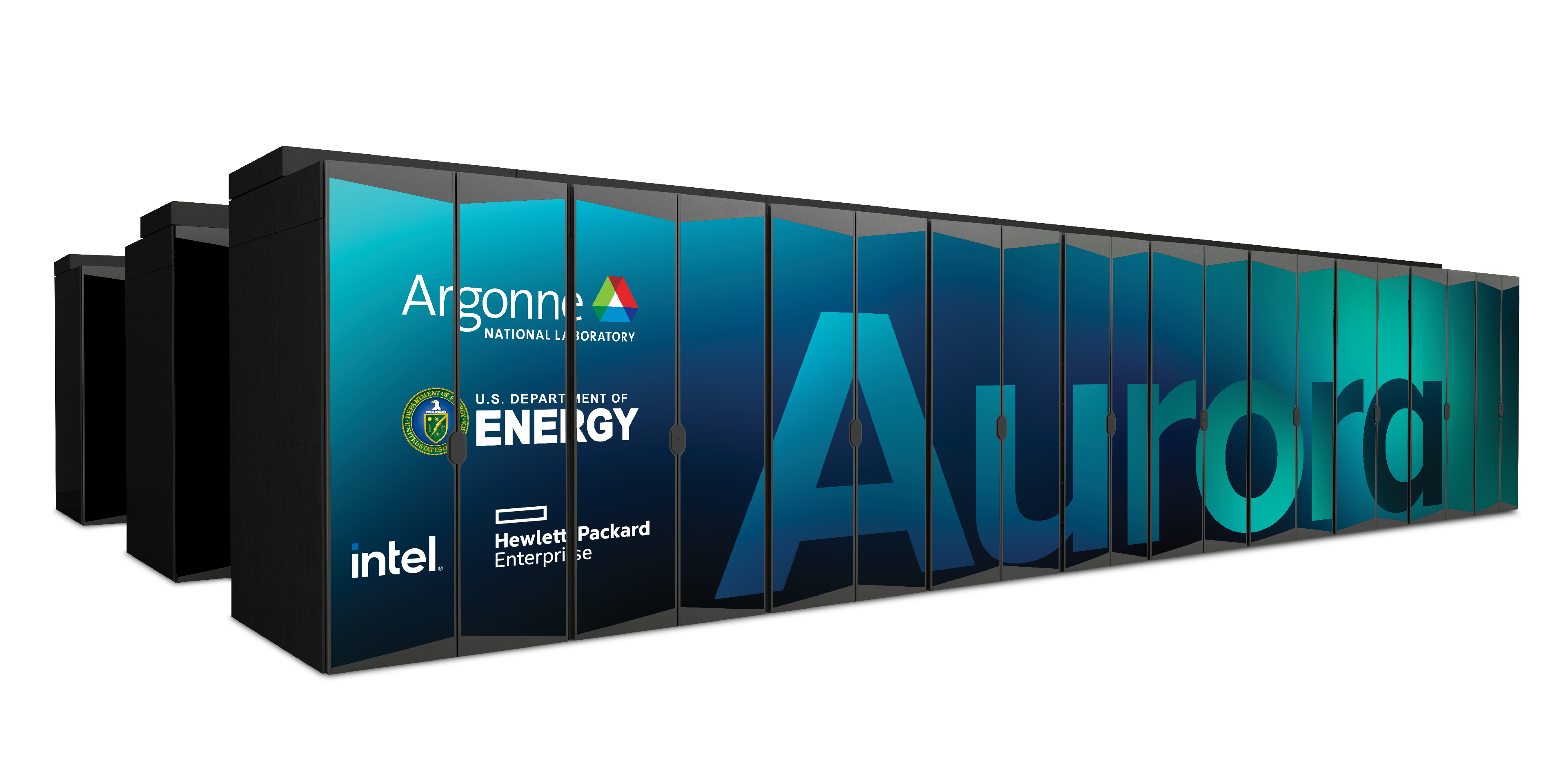

🌌 Aurora

| Component | Total |
| --- | --- |
| Racks | 166 |
| Nodes | 10,624 |
| CPUs | 21,248 |
| GPUs | 63,744 |
| NICs | 84,992 |
| HBM | 8 PB |
| DDR5c | 10 PB |
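As a quick sanity check on the table, the totals can be divided out to recover the per-rack and per-node layout. This is only arithmetic on the numbers above; the helper name `per_unit` is made up for illustration.

```python
# Totals copied from the Aurora table above.
AURORA = {"racks": 166, "nodes": 10_624, "cpus": 21_248, "gpus": 63_744, "nics": 84_992}


def per_unit(total: int, units: int) -> int:
    """Return total / units, insisting that the division is exact."""
    quotient, remainder = divmod(total, units)
    if remainder:
        raise ValueError(f"{total} is not a multiple of {units}")
    return quotient


nodes_per_rack = per_unit(AURORA["nodes"], AURORA["racks"])
per_node = {k: per_unit(AURORA[k], AURORA["nodes"]) for k in ("cpus", "gpus", "nics")}

print(nodes_per_rack)  # 64
print(per_node)        # {'cpus': 2, 'gpus': 6, 'nics': 8}
```

With 6 GPUs per node, the "12 XPU / node" figure quoted later in the deck corresponds to 2 XPUs per GPU.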

🤝 Teams

- Planning

- Data

  - Aggregate existing data and generate new (synthetic) data

- Models / Training

  - Pre-train a series of models on publicly available data

- Post-Training

  - Fine-tuning, alignment, reinforcement learning

- Evaluation

  - Skills, trustworthiness, safety, robustness, privacy, machine ethics

- Inference

  - Model serving, API development / public-facing web services

- Distribution

  - Licensing, generating and distributing artifacts for public consumption

- Communication

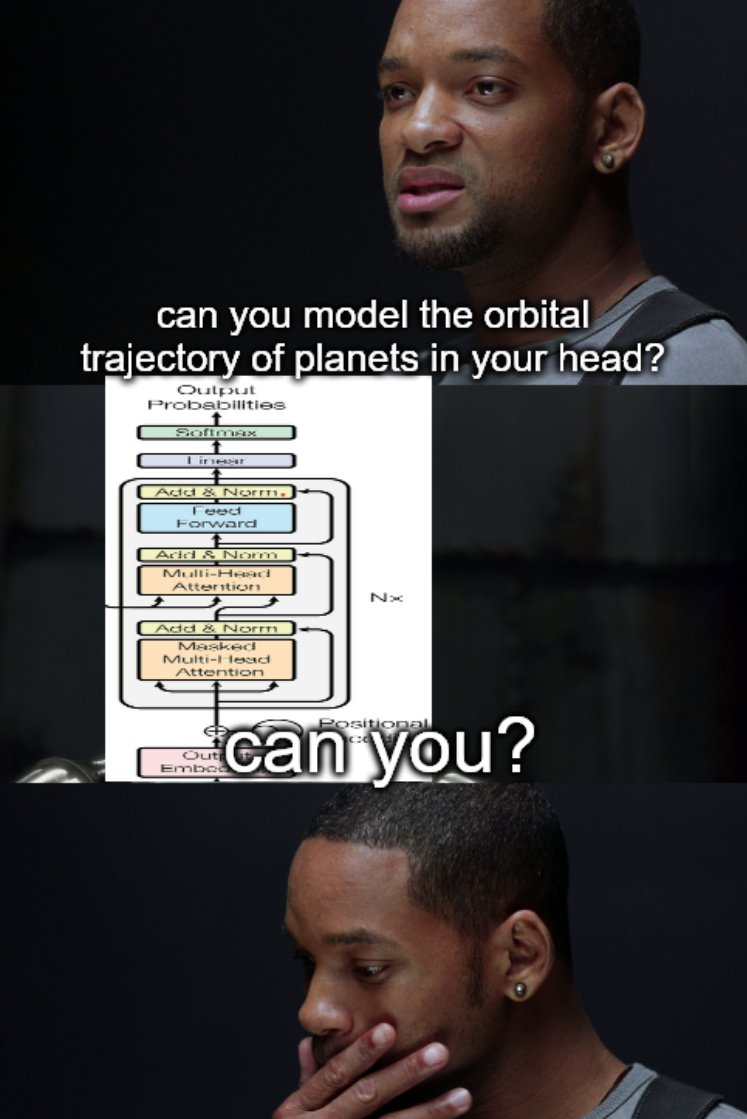

🍎 Training LLMs

- Want to minimize cost of training

- Maximize throughput (?)

- Data parallelism takes us only so far (McCandlish et al. 2018)…

- Possible directions:

- Large batch training (?)

- New (second-order?) optimizers

- Better tokenization schemes (no tokenizers?)

- Better data (?)

- Alternative architecture(s) (?)

- Diffusion / flow-matching

- Sub-quadratic attention (state space models, …)

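Why data parallelism "takes us only so far" can be illustrated with the simple trade-off model from McCandlish et al. (2018): reaching a fixed loss with batch size B takes roughly S = S_min (1 + B_crit / B) steps and E = E_min (1 + B / B_crit) examples, with B_crit = E_min / S_min. The sketch below just evaluates that model; the values of `s_min` and `e_min` are hypothetical, not measurements from AuroraGPT.

```python
from typing import Tuple


def steps_and_examples(batch_size: float, s_min: float, e_min: float) -> Tuple[float, float]:
    """Evaluate the McCandlish et al. (2018) steps/examples trade-off model."""
    b_crit = e_min / s_min                        # critical batch size
    steps = s_min * (1 + b_crit / batch_size)     # optimizer steps (wall-clock proxy)
    examples = e_min * (1 + batch_size / b_crit)  # examples processed (compute proxy)
    return steps, examples


# Hypothetical constants, chosen only to make the trend visible.
S_MIN, E_MIN = 1_000.0, 1_000_000.0  # => B_crit = 1000
for batch in (100, 1_000, 10_000, 100_000):
    steps, examples = steps_and_examples(batch, S_MIN, E_MIN)
    print(f"B={batch:>7}: steps={steps:>9.0f}  examples={examples:>12.0f}")
```

Well beyond B_crit the step count flattens out near S_min while the number of examples (and so the cost) keeps growing linearly with B.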

🎯 Goals

We need our implementation to be:

- 💯 Correct

- Consistent across systems

- Requires being able to run the same code on multiple different machines

- Independent of hardware and communication library (e.g. CUDA, ROCm, XPU, CPU, MPS, …)

- 🚀 Scalable

- Performant across thousands of GPUs

- Highly configurable and extensible

- Parallelizable across (tensor, pipeline, sequence) dimension(s)

- Robust against {hardware, network, filesystem, transient} failures
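One small ingredient of robustness against transient failures is retrying flaky operations (e.g. a filesystem read) with exponential backoff. The sketch below is a generic, standard-library illustration of that idea; `retry` and its parameters are hypothetical names, not part of Megatron-DeepSpeed.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(
    fn: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    retry_on: Tuple[Type[BaseException], ...] = (OSError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying on `retry_on` with exponentially growing delays."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the original error
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")
```

For example, `retry(lambda: open(path, "rb").read(), attempts=5)` would re-attempt a read that fails with an `OSError`, where `path` is whatever file is being loaded.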

🏋️ Challenges: In Practice

This is incredibly difficult in practice, due in part to:

- Brand new {hardware, architecture, software}

- Lack of native support in existing frameworks (though getting better!)

- General system stability

- +10k nodes (× 12 XPU / node) ⇒ +100k XPUs

- Network performance

- File system stability (impacted by other users!)

- Many unexpected difficulties occur at increasingly large scales

- Combinatorial explosion of possible configurations and experiments

- {hyperparameters, architectures, tokenizers, learning rates, …}
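To get a feel for the combinatorial explosion, the sketch below counts the configurations in a deliberately tiny grid. Every axis and value here is hypothetical and chosen only for illustration.

```python
from itertools import product
from math import prod

# Hypothetical search space: a handful of options per axis.
search_space = {
    "learning_rate": [1e-4, 3e-4, 1e-3],
    "tokenizer": ["tokenizer_a", "tokenizer_b"],
    "architecture": ["arch_a", "arch_b"],
    "optimizer": ["opt_a", "opt_b", "opt_c"],
    "micro_batch_size": [1, 2, 4],
}

# The number of experiments is the product of the axis sizes.
n_configs = prod(len(values) for values in search_space.values())

# Enumerate every combination as a dict of settings.
configs = [dict(zip(search_space, combo)) for combo in product(*search_space.values())]

print(n_configs)  # 108
```

Five small axes already give 108 runs; each additional axis multiplies the count again.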

💾 Training: 2T Tokens

- To train a fixed model on trillions of tokens requires:

- Aggregating data from multiple different corpora (e.g. ArXiv, Reddit, StackExchange, GitHub, Wikipedia, etc.)

- Sampling each training batch according to a fixed distribution across corpora

- Building indices that map batches of tokens into these files (indexing)

The original implementation was slow:

- Designed to run serially on a single device

- Major bottleneck when debugging data pipeline at scale

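The sampling and indexing steps can be sketched as follows. This is a minimal, serial illustration of one common approach (greedily picking whichever corpus lags furthest behind its target share), not necessarily what the AuroraGPT pipeline does; `build_blend_index` and the weights are hypothetical.

```python
from typing import List, Sequence, Tuple


def build_blend_index(weights: Sequence[float], num_samples: int) -> List[Tuple[int, int]]:
    """Map each global sample to (corpus_id, sample_id_within_corpus).

    At every step, pick the corpus whose running count lags its target
    share (weight / sum(weights)) by the largest amount.
    """
    total = sum(weights)
    counts = [0] * len(weights)
    index: List[Tuple[int, int]] = []
    for i in range(num_samples):
        # Lag of each corpus, scaled by `total` to avoid a division.
        lags = [w * (i + 1) - c * total for w, c in zip(weights, counts)]
        corpus = max(range(len(weights)), key=lags.__getitem__)
        index.append((corpus, counts[corpus]))
        counts[corpus] += 1
    return index


# Hypothetical 50% / 30% / 20% blend over three corpora.
blend = build_blend_index([5, 3, 2], num_samples=10)
print([corpus for corpus, _ in blend])
```

Because the index depends only on the weights and the sample count, it is deterministic and can be built once and cached, or split into chunks and built in parallel.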

🍹 Blending Data, Efficiently

📉 Loss Curve: Training AuroraGPT-7B on 2T Tokens

✨ Features

- 🕸️ Parallelism:

- {data, tensor, pipeline, sequence, …}

- ♻️ Checkpoint Converters:

- Megatron ⇄ 🤗 HF ⇄ ZeRO ⇄ Universal

- 🔀 DeepSpeed Integration:

- ZeRO Offloading

- Activation checkpointing

- AutoTP (WIP)

- Ability to leverage features from the DeepSpeed community
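When combining parallelism dimensions, the data-parallel degree is whatever is left of the world size after tensor and pipeline parallelism take their share. The helper below is a hypothetical sketch of that bookkeeping; it leaves out sequence parallelism, whose treatment differs between frameworks.

```python
def data_parallel_size(world_size: int, tensor_parallel: int = 1, pipeline_parallel: int = 1) -> int:
    """Return the data-parallel degree for a given world size and model-parallel layout."""
    model_parallel = tensor_parallel * pipeline_parallel  # ranks holding one model replica
    if world_size % model_parallel:
        raise ValueError(
            f"world_size={world_size} is not divisible by "
            f"tensor_parallel * pipeline_parallel = {model_parallel}"
        )
    return world_size // model_parallel


# Hypothetical layout: 24 ranks, TP=4, PP=2 leaves 3 data-parallel replicas.
print(data_parallel_size(24, tensor_parallel=4, pipeline_parallel=2))  # 3
```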

✨ Features (even more!)

- 🧗 Optimizers:

- Support for many different optimizers:

- Distributed Shampoo, Muon, ADOPT, Sophia, LAMB, GaLore, ScheduleFree, …

- See full list

- Large batch training


- 📊 Experiment Tracking:

- Automatic experiment and metric tracking with Weights & Biases

🔭 LLMs for Science

ChatGPT: explain this image

🤔 Evaluating Models on Scientific Applications

- What to measure?

- Knowledge Extraction, Retrieval, Distillation, Synthesis: LLM is provided a question or instruction and a truthful answer is expected

- Text Grounded: Answers are expected to be fully grounded on peer-reviewed references to support responses

- Reasoning: LLMs are expected to solve deductive (prove a theory or hypothesis from formal logic and observations) and inductive (validate / explain observations from theories) problems

- Creativity: A creative answer is expected from a question or instruction

- thoughtful dialogue, coding, etc.

⚖️ Evaluating FM Skills for Science: Criteria

- Criteria for all of the above:

- Correctness of facts

- Accuracy of solutions and inferences

- Reliability: consistently good in quality or performance

- Speed: how fast a response is produced

- # shots: how many examples are needed for good quality

- Extent of prompt engineering
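Several of these criteria can be measured with a very small harness. The sketch below is a hypothetical illustration: `model` is any callable from prompt to answer, correctness is exact string match, and reliability is the fraction of questions answered identically across repeats. Real scientific evaluations need far richer scoring.

```python
import time
from typing import Callable, Dict, Sequence, Tuple


def build_prompt(question: str, shots: Sequence[Tuple[str, str]]) -> str:
    """Prepend worked examples (the "# shots" criterion) to a question."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in shots]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def evaluate(
    model: Callable[[str], str],
    tasks: Sequence[Tuple[str, str]],
    shots: Sequence[Tuple[str, str]] = (),
    repeats: int = 3,
) -> Dict[str, float]:
    """Score a model on correctness, reliability, and speed."""
    if not tasks or repeats < 1:
        raise ValueError("need at least one task and one repeat")
    n_correct, n_consistent, latencies = 0, 0, []
    for question, expected in tasks:
        prompt = build_prompt(question, shots)
        answers = []
        for _ in range(repeats):
            start = time.perf_counter()
            answers.append(model(prompt).strip())
            latencies.append(time.perf_counter() - start)
        n_correct += sum(a == expected for a in answers)
        n_consistent += len(set(answers)) == 1  # same answer every time
    return {
        "correctness": n_correct / (len(tasks) * repeats),
        "reliability": n_consistent / len(tasks),
        "mean_latency_s": sum(latencies) / len(latencies),
    }
```

Running `evaluate` with increasing numbers of `shots` gives a rough picture of how many examples a model needs before quality stops improving.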

🧬 MProt-DPO: Scaling Results

- ~4 EFLOPS @ Aurora

- 38,400 XPUs = 3,200 [node] × 12 [XPU / node]

- 🔔 Gordon Bell Finalist
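The headline numbers can be cross-checked with a few lines of arithmetic. The per-XPU figure below is only what the quoted "~4 EFLOPS" implies when divided evenly; it is a rough derived estimate, not a reported measurement.

```python
nodes = 3_200
xpus_per_node = 12
total_flops = 4e18  # "~4 EFLOPS", taken as exactly 4e18 FLOP/s for this estimate

xpus = nodes * xpus_per_node
per_xpu_tflops = total_flops / xpus / 1e12  # implied average per XPU

print(xpus)                      # 38400
print(round(per_xpu_tflops, 1))  # 104.2
```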


🚂 Loooooooooong Sequence Lengths

- Working with Microsoft/DeepSpeed team to enable longer sequence lengths (context windows) for LLMs

- See my blog post for additional details

SEQ_LEN for both 25B and 33B models (See: Song et al. (2023))

📓 References

- argonne-lcf/Megatron-DeepSpeed: For the largest of large language models.

- saforem2/ezpz: Distributed training, ezpz. 🍋

- 📊 See my other slides at samforeman.me/talks

❤️ Thank you!

🙏 Acknowledgements

This research used resources of the Argonne Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC02-06CH11357.

📑 Bibliography

- Refs:

- Wei et al. (2022)

- Animations from The Illustrated Transformer