AuroraGPT: Training Foundation Models on Supercomputers

Sam Foreman

@ Argonne National Laboratory

2025-12-16

🧰 AuroraGPT: Toolbox

- Datasets and data pipelines (how do we deal with scientific data?)

- Software infrastructure and workflows (scalable, robust, extensible)

- Evaluation of state-of-the-art LLMs (how do they perform on scientific tasks?)

🍋 ezpz

saforem2/ezpz

Write once, run anywhere

🚂 Training

argonne-lcf/Megatron-DeepSpeed

For the largest of large language models

🏃♂️ Running

argonne-lcf/inference-endpoints

Inference endpoints for LLMs, hosted @ ALCF

👥 Team Leads

🤝 Teams

- Planning

- Data Prep
  - Accumulate 20T+ tokens of high-quality scientific text and structured data

- Models / Training
  - Train (entirely from scratch) a series of models on publicly available data

- Evaluation
  - Skills, trustworthiness, safety, robustness, privacy, machine ethics

- Post-Training
  - Fine-tuning, alignment

- Inference
  - Model serving, API development / public-facing web services

- Distribution
  - Licensing, generating and distributing artifacts for public consumption

- Communication

🏋️ Challenges

Training LLMs at this scale is incredibly difficult in practice, due in part to:

- Brand new {hardware, architecture, software}

- Lack of native support in existing frameworks (though getting better!)

- General system stability
  - 10k+ nodes (× 12 XPUs / node) ⇒ 100k+ XPUs
  - network performance
  - file system stability (impacted by other users!)
  - many unexpected difficulties occur at increasingly large scales

- Combinatorial explosion of possible configurations and experiments
  - {hyperparameters, architectures, tokenizers, learning rates, …}

💾 AuroraGPT: Training

- Training a fixed model on trillions of tokens requires:
  - Aggregating data from multiple different corpora (e.g. arXiv, Reddit, StackExchange, GitHub, Wikipedia, etc.)
  - Sampling each training batch according to a fixed distribution across corpora
  - Building indices that map batches of tokens into these files (indexing)

The original implementation was slow:

- Designed to run serially on a single device

- Major bottleneck when debugging the data pipeline at scale


🍹 AuroraGPT: Blending Data, Efficiently
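A minimal sketch of the blending step described above, assuming a list of per-corpus weights and a target number of samples. A greedy rule assigns each global sample to the corpus that lags furthest behind its target share, producing the two index arrays a blended dataset needs: which corpus each sample comes from, and its position within that corpus. This follows the general approach of Megatron-style data loaders as we understand it. The helper `build_blending_indices` shown here is hypothetical and is not the repository's code.

```python
from typing import List, Sequence, Tuple


def build_blending_indices(
    weights: Sequence[float], num_samples: int
) -> Tuple[List[int], List[int]]:
    """Hypothetical helper: assign each global sample to a corpus.

    Greedy rule: at step i, pick the corpus whose actual sample count lags
    furthest behind its target share, probs[d] * (i + 1).
    Returns (dataset_index, dataset_sample_index): sample i is drawn from
    corpus dataset_index[i], at position dataset_sample_index[i].
    """
    total = float(sum(weights))
    probs = [w / total for w in weights]  # normalize weights to a distribution
    counts = [0] * len(probs)             # samples taken from each corpus so far
    dataset_index: List[int] = []
    dataset_sample_index: List[int] = []
    for i in range(num_samples):
        # How far each corpus is behind its target after i + 1 samples.
        deficits = [p * (i + 1) - c for p, c in zip(probs, counts)]
        d = max(range(len(deficits)), key=deficits.__getitem__)
        dataset_index.append(d)
        dataset_sample_index.append(counts[d])
        counts[d] += 1
    return dataset_index, dataset_sample_index


# Example: three corpora mixed 50/30/20 (illustrative weights, not AuroraGPT's).
ds_idx, ds_sample_idx = build_blending_indices([5, 3, 2], num_samples=10)
print(ds_idx)
```

The loop costs O(N·K) for N samples and K corpora, and it is inherently sequential. At trillions of tokens, this is exactly the kind of serial, single-device step that turns into a bottleneck. A faster implementation would have to vectorize it or split index construction across ranks.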

📉 Training AuroraGPT-7B on 2T Tokens

📉 Training AuroraGPT-2B on 7T Tokens

✨ Features

argonne-lcf/Megatron-DeepSpeed

- 🕸️ Parallelism:
  - {data, tensor, pipeline, sequence, …}

- ♻️ Checkpoint Converters:
  - Megatron ⇄ 🤗 HF ⇄ ZeRO ⇄ Universal

- 🔀 DeepSpeed Integration:
  - ZeRO Offloading
  - Activation checkpointing
  - AutoTP (WIP)
  - Ability to leverage features from the DeepSpeed community
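To combine these parallelism dimensions, each rank needs a coordinate in a (data, pipeline, tensor) grid, and the grid's dimensions must multiply to the world size. The sketch below shows one way to compute those coordinates. It assumes tensor-parallel ranks vary fastest, then data-parallel ranks, then pipeline stages, which is a common Megatron-style ordering. Sequence parallelism and the repository's actual process-group construction are not modeled. `ParallelCoords` and `rank_to_coords` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParallelCoords:
    dp: int  # data-parallel replica index
    pp: int  # pipeline stage index
    tp: int  # tensor-parallel shard index


def rank_to_coords(rank: int, world_size: int, tp: int, pp: int) -> ParallelCoords:
    """Map a global rank to (dp, pp, tp) coordinates.

    Assumed layout: TP varies fastest, then DP, then PP, so consecutive
    ranks (typically on the same node) form a tensor-parallel group.
    """
    if world_size % (tp * pp) != 0:
        raise ValueError("world_size must be divisible by tp * pp")
    if not 0 <= rank < world_size:
        raise ValueError("rank out of range")
    dp = world_size // (tp * pp)  # data-parallel degree is whatever is left over
    return ParallelCoords(
        dp=(rank // tp) % dp,
        pp=rank // (tp * dp),
        tp=rank % tp,
    )


# Example: 2 nodes x 12 XPUs = 24 ranks, TP=4, PP=2 -> DP=3.
for r in (0, 5, 13, 23):
    print(r, rank_to_coords(r, world_size=24, tp=4, pp=2))
```

With 12 XPUs per node and a TP degree that divides 12, this ordering keeps each tensor-parallel group, the most communication-heavy one, inside a single node.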

✨ Features (even more!)

- 🧗 Optimizers:
  - Support for many different optimizers:
    - Distributed Shampoo, Muon, ADOPT, Sophia, LAMB, GaLore, Schedule-Free, …
    - See full list
  - Large batch training


- 📊 Experiment Tracking:
  - Automatic experiment and metric tracking with Weights & Biases
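Large-batch training is the setting where layer-wise adaptive optimizers such as LAMB are typically used. The sketch below is a single-layer LAMB step in plain Python, given for illustration and not taken from the repository's implementation. It computes an Adam-style direction, adds decoupled weight decay, and then rescales the result by the trust ratio ‖w‖ / ‖r‖, so that each layer's step size tracks its own weight norm. The function name and default hyperparameters are assumptions.

```python
import math
from typing import List, Tuple


def lamb_step(
    w: List[float], g: List[float], m: List[float], v: List[float], step: int,
    lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999,
    eps: float = 1e-6, weight_decay: float = 0.01,
) -> Tuple[List[float], List[float], List[float]]:
    """One LAMB update for a single layer stored as a flat list of floats."""
    # Adam first/second moment estimates with bias correction.
    m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
    v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
    bc1, bc2 = 1 - beta1 ** step, 1 - beta2 ** step
    # Adam-style direction plus decoupled weight decay.
    r = [(mi / bc1) / (math.sqrt(vi / bc2) + eps) + weight_decay * wi
         for mi, vi, wi in zip(m, v, w)]
    w_norm = math.sqrt(sum(x * x for x in w))
    r_norm = math.sqrt(sum(x * x for x in r))
    # Layer-wise trust ratio; fall back to 1.0 if either norm is zero.
    trust = w_norm / r_norm if w_norm > 0 and r_norm > 0 else 1.0
    w = [wi - lr * trust * ri for wi, ri in zip(w, r)]
    return w, m, v


w, m, v = lamb_step([3.0, 4.0], [0.1, -0.2], [0.0, 0.0], [0.0, 0.0], step=1)
print(w)
```

When both norms are nonzero, the step has norm exactly `lr * ‖w‖`, independent of the raw gradient scale. This is the motivation for using LAMB with very large batches.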

🧬 MProt-DPO

- Finalist: SC’24 ACM Gordon Bell Prize

- One of the first protein design toolkits that integrates:
  - Text, (protein/gene) sequence, structure/conformational sampling modalities to build aligned representations for protein sequence-function mapping

🧬 Scaling Results (2024)

~4 EFLOPS @ Aurora

38,400 XPUs = 3,200 [node] × 12 [XPU / node]

- MProt-DPO: Breaking the ExaFLOPS Barrier for Multimodal Protein Design Workflows (Dharuman et al. (2024))
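Dividing the aggregate rate by the XPU count gives a rough per-XPU average. This is a back-of-the-envelope figure that takes "~4 EFLOPS" at face value:

$$
\frac{4 \times 10^{18}\ \text{FLOP/s}}{38{,}400\ \text{XPUs}} \approx 1.04 \times 10^{14}\ \text{FLOP/s per XPU} \approx 104\ \text{TFLOPS per XPU}
$$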

🧬 MProt-DPO: Scaling Results

🚂 Loooooooooong Sequence Lengths

- Working with Microsoft/DeepSpeed team to enable longer sequence lengths (context windows) for LLMs

- See my blog post for additional details

SEQ_LEN for both 25B and 33B models (see Song et al. (2023))

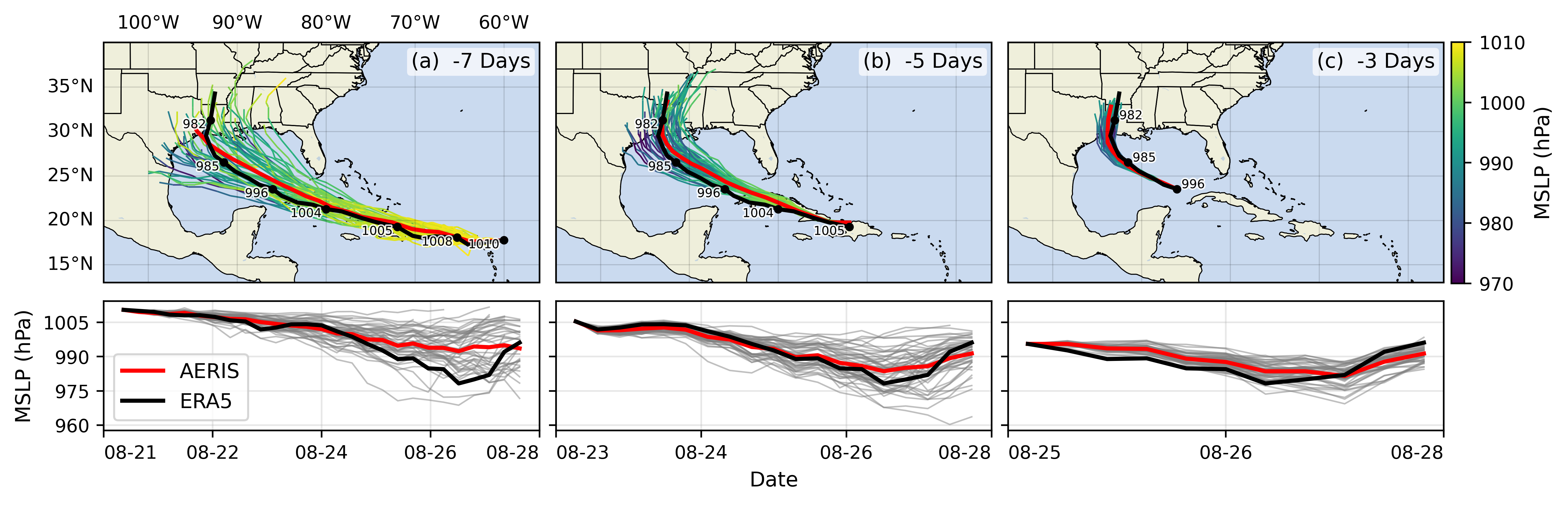
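The deck does not spell out the mechanism behind these longer sequence lengths. One sequence-parallel approach from the DeepSpeed team is DeepSpeed-Ulysses. It shards the sequence across P ranks, then performs an all-to-all before attention so that each rank instead holds the full sequence for H / P heads. The toy single-process simulation below shows only that layout change. Strings stand in for per-token, per-head activations, and `ulysses_all_to_all` is a hypothetical name.

```python
from typing import List

# local[r][s][h]: rank r holds its S/P sequence chunk, for all H heads.
Shard = List[List[str]]


def ulysses_all_to_all(local: List[Shard]) -> List[Shard]:
    """Simulate sequence-sharded -> head-sharded redistribution across P ranks."""
    P = len(local)
    H = len(local[0][0])
    if H % P != 0:
        raise ValueError("number of heads must be divisible by the number of ranks")
    hp = H // P  # heads owned by each rank after the exchange
    out: List[Shard] = []
    for dst in range(P):  # destination rank dst receives head group dst
        full_seq: Shard = []
        for src in range(P):  # gather every rank's sequence chunk, in order
            for token in local[src]:
                full_seq.append(token[dst * hp:(dst + 1) * hp])
        out.append(full_seq)
    return out


# Example: P=2 ranks, sequence length S=4, H=4 heads.
P, S, H = 2, 4, 4
local = [[[f"t{s}h{h}" for h in range(H)] for s in range(r * S // P, (r + 1) * S // P)]
         for r in range(P)]
for rank, shard in enumerate(ulysses_all_to_all(local)):
    print(rank, shard)
```

After attention, the inverse all-to-all restores the sequence-sharded layout. Outside attention, each rank therefore only stores S / P tokens, so the usable sequence length grows with the number of ranks, up to the head count.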

🌎 AERIS (2025)

👀 High-Level Overview of AERIS

➕ Contributions

☔ AERIS

First billion-parameter diffusion model for weather + climate

- Operates at the pixel level (1 × 1 patch size), guided by physical priors

- Medium-range forecast skill:
  - Surpasses IFS ENS, competitive with GenCast

- Uniquely stable on seasonal scales to 90 days

🌀 SWiPe

A novel 3D (sequence-window-pipeline) parallelism strategy for training transformers across high-resolution inputs

- Enables scalable small-batch training on large supercomputers

- 10.21 ExaFLOPS @ 121,000 Intel XPUs (Aurora)

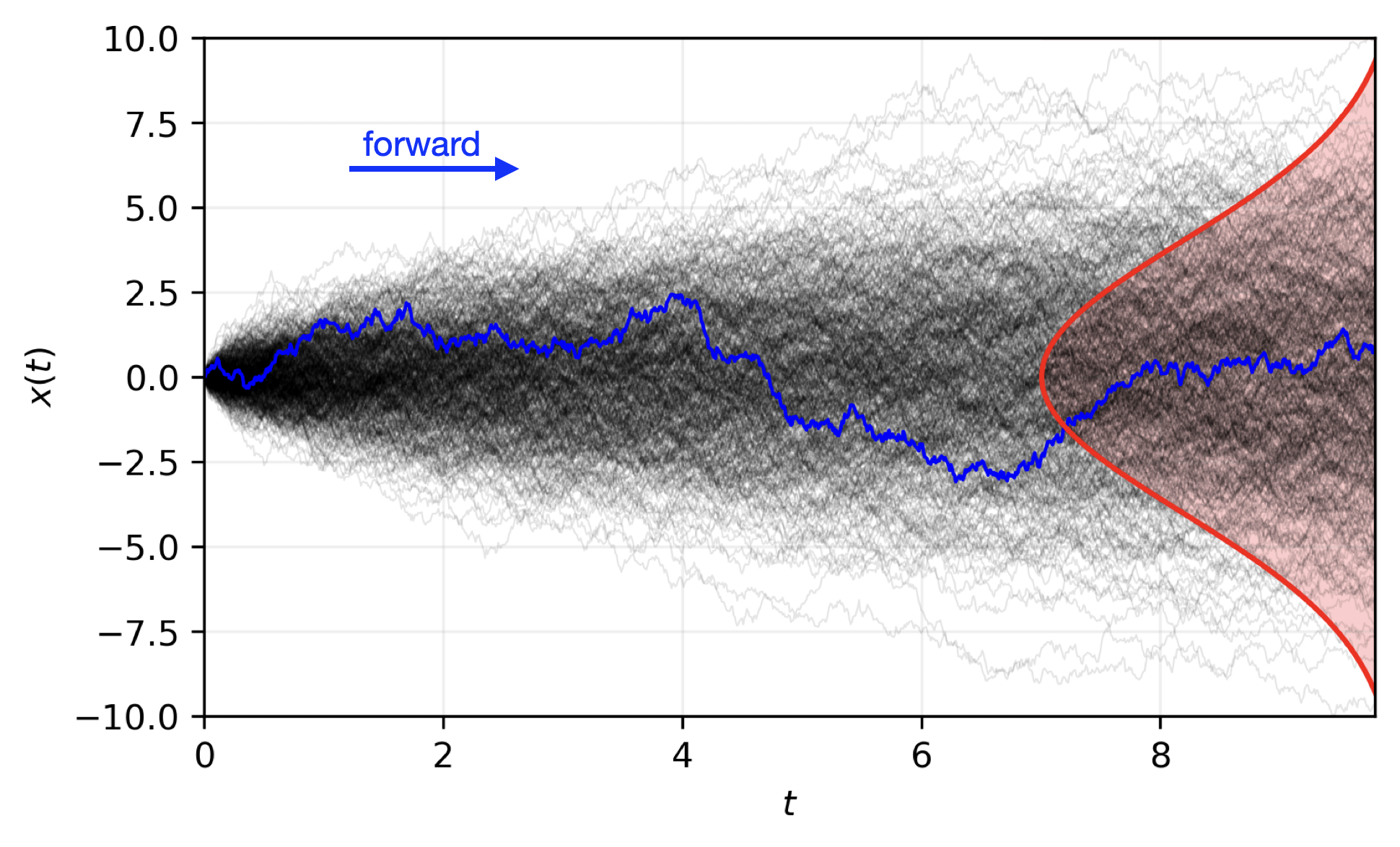

⚠️ Issues with the Deterministic Approach

Transformers:

- Deterministic

- Single input → single forecast

Diffusion:

- Probabilistic

- Single input → ensemble of forecasts

- Captures uncertainty and variability in weather predictions

- Enables ensemble forecasting for better risk assessment
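Here is the deterministic vs. probabilistic contrast in concrete terms. A probabilistic model is sampled several times from the same input, with independent random noise each time, to form an ensemble. The ensemble's spread and exceedance probabilities then support risk assessment. The sampler below is a hypothetical stand-in that adds Gaussian noise around the input. It is not AERIS's diffusion sampler, and the threshold is arbitrary.

```python
import random
import statistics
from typing import Callable, Dict, List, Sequence

# Hypothetical sampler type: (input state, RNG) -> one forecast value.
Sampler = Callable[[float, random.Random], float]


def ensemble_forecast(sample_fn: Sampler, x: float, n_members: int, seed: int = 0) -> List[float]:
    """Draw n_members forecasts from the same input x using independent noise."""
    rng = random.Random(seed)
    return [sample_fn(x, rng) for _ in range(n_members)]


def summarize(members: Sequence[float], threshold: float) -> Dict[str, float]:
    """Ensemble mean, spread (sample std. dev.), and P(value > threshold)."""
    return {
        "mean": statistics.fmean(members),
        "spread": statistics.stdev(members),
        "p_exceed": sum(m > threshold for m in members) / len(members),
    }


# Hypothetical stand-in for a probabilistic model: input value plus Gaussian noise.
def toy_sampler(x: float, rng: random.Random) -> float:
    return x + rng.gauss(0.0, 2.0)


members = ensemble_forecast(toy_sampler, x=25.0, n_members=50, seed=1)
print(summarize(members, threshold=28.0))
```

A deterministic model is the degenerate case: every member is identical, so the spread is zero and the exceedance probability can only be 0 or 1.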

🎲 Transitioning to a Probabilistic Model

🌀 Sequence-Window-Pipeline Parallelism SWiPe

SWiPe is a novel parallelism strategy for Swin-based Transformers:

- Hybrid 3D parallelism strategy, combining:
  - Sequence parallelism (SP)
  - Window parallelism (WP)
  - Pipeline parallelism (PP)

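Window parallelism relies on the fact that Swin-style attention is computed inside non-overlapping windows, so different windows can be processed on different ranks. The deck does not describe how SWiPe assigns windows. The sketch below assumes a simple contiguous split of windows across `wp` ranks. It ignores shifted windows, which would require exchanging boundary tokens between ranks, and its helper names are hypothetical.

```python
from typing import Dict, List, Tuple

Window = Tuple[int, int]  # (window_row, window_col)


def partition_windows(height: int, width: int, win: int) -> List[Window]:
    """Split a height x width token grid into non-overlapping win x win windows."""
    if height % win or width % win:
        raise ValueError("grid dimensions must be divisible by the window size")
    return [(r, c) for r in range(height // win) for c in range(width // win)]


def window_token_coords(window: Window, win: int) -> List[Tuple[int, int]]:
    """Token (row, col) positions covered by one window."""
    r0, c0 = window[0] * win, window[1] * win
    return [(r0 + i, c0 + j) for i in range(win) for j in range(win)]


def assign_windows(windows: List[Window], wp: int) -> Dict[int, List[Window]]:
    """Assumed contiguous split of windows across wp window-parallel ranks."""
    if len(windows) % wp:
        raise ValueError("number of windows must be divisible by wp")
    per_rank = len(windows) // wp
    return {rank: windows[rank * per_rank:(rank + 1) * per_rank] for rank in range(wp)}


# Example: 8 x 8 token grid, 4 x 4 windows, 2 window-parallel ranks.
windows = partition_windows(8, 8, win=4)
for rank, owned in assign_windows(windows, wp=2).items():
    print(rank, owned)
```

Attention inside a window needs only that window's tokens, so under this assumption each rank can run its share of (unshifted) window attention without communicating.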

🚀 AERIS: Scaling Results

- 10 EFLOPS (sustained) @ 120,960 XPUs

- See Hatanpää et al. (2025) for additional details
  - arXiv:2509.13523

🌪️ Hurricane Laura

📓 References

❤️ Acknowledgements

This research used resources of the Argonne Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC02-06CH11357.