Training Foundation Models on Supercomputers

Sam Foreman

@ Georgia Institute of Technology

2025-10-15

🌐 Distributed Training

🚀 Scaling: Overview

- ✅ Goal:

- Minimize: Cost (i.e. amount of time spent training)

- Maximize: Performance

📑 Note

See 🤗 Performance and Scalability for more details

🐢 Training on a Single Device

SLOW!: Model size limited by GPU memory

🕸️ Parallelism Strategies

- Data Parallelism

- Split data across workers

- Easiest to implement

- No changes to model

- Model Parallelism

- Split model across workers

- Hybrid Parallelism

- Combine data + model parallelism

- More complex to implement

- Requires changes to model

👬 Training on Multiple GPUs: Data Parallelism

▶️ Data Parallel: Forward Pass

◀️ Data Parallel: Backward Pass
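As a rough illustration of the forward / backward pattern above, here is a minimal pure-Python sketch of one data-parallel gradient computation. The "workers" are simulated as list slices, the one-parameter model `y = w * x` and the helper names (`grad_mse`, `data_parallel_grad`) are hypothetical, and the final average stands in for the gradient synchronization a real framework would perform.

```python
# Toy data-parallel step for a 1-parameter linear model y = w * x.
# Pure-Python simulation: "workers" are list slices, not real devices.

def grad_mse(w, batch):
    """Gradient of 0.5 * mean((w*x - y)^2) with respect to w."""
    return sum((w * x - y) * x for x, y in batch) / len(batch)

def data_parallel_grad(w, batch, num_workers):
    """Split the batch evenly, compute local gradients, then average them."""
    assert len(batch) % num_workers == 0, "sketch assumes equal-sized shards"
    shard = len(batch) // num_workers
    local_grads = [
        grad_mse(w, batch[r * shard:(r + 1) * shard])  # forward + backward on rank r
        for r in range(num_workers)
    ]
    # Stand-in for the gradient averaging step: every rank ends up with this value.
    return sum(local_grads) / num_workers

batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
w = 0.5
full_batch_grad = grad_mse(w, batch)                      # single-device reference
dp_grad = data_parallel_grad(w, batch, num_workers=2)     # two simulated workers
```

With equal-sized shards, the averaged gradient matches the single-device gradient, which is why data parallelism requires no changes to the model itself.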

🔄 Collective Communication

- Broadcast: Send data from one node to all other nodes

- Reduce: Aggregate data from all nodes to one node

- AllReduce: Aggregate data from all nodes to all nodes

- Gather: Collect data from all nodes to one node

- AllGather: Collect data from all nodes to all nodes

- Scatter: Distribute data from one node to all other nodes

Reduce

- Perform a reduction on data across ranks and send the result to an individual rank
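To make the semantics of these collectives concrete, here is a small pure-Python simulation in which index `r` of each list plays the role of rank `r`. This is only a sketch of what each operation leaves on each rank, not the API of any communication library; the function names and the use of `None` for "no result on this rank" are assumptions made for illustration.

```python
# Pure-Python simulation of collectives: index r of each list is "rank r".
# None marks a rank that does not receive a result.

def broadcast(data, root=0):
    """Every rank ends up with the root's value."""
    return [data[root] for _ in data]

def reduce(data, root=0, op=sum):
    """Only the root ends up with the aggregated value."""
    return [op(data) if r == root else None for r in range(len(data))]

def all_reduce(data, op=sum):
    """Every rank ends up with the aggregated value."""
    result = op(data)
    return [result for _ in data]

def gather(data, root=0):
    """Only the root ends up with the list of all values."""
    return [list(data) if r == root else None for r in range(len(data))]

def all_gather(data):
    """Every rank ends up with the list of all values."""
    return [list(data) for _ in data]

def scatter(chunks):
    """The root holds `chunks`; rank r receives chunks[r]."""
    return list(chunks)

values = [1, 2, 3, 4]  # one value per rank
reduced = reduce(values)
all_reduced = all_reduce(values)
```

Here `reduced` holds the sum only on rank 0, while `all_reduced` holds it on every rank.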

🐣 Getting Started: In Practice

- 📦 Distributed Training Frameworks:

- 🍋 saforem2/ezpz

- 🤖 Megatron-LM

- 🤗 Accelerate

- 🔥 PyTorch

- 🚀 DeepSpeed

- 🧠 Memory Management:

- FSDP vs. ZeRO

- Activation Checkpointing

- Mixed Precision Training

- Gradient Accumulation

- Offloading to CPU/NVMe
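Of the techniques listed, gradient accumulation is the simplest to sketch: gradients from several small micro-batches are summed (scaled by the number of micro-batches) before a single optimizer step, so only one micro-batch needs to be processed at a time. The toy model and the helper names below are hypothetical and only illustrate the arithmetic.

```python
# Toy gradient accumulation for a 1-parameter linear model y = w * x.

def grad_mse(w, batch):
    """Gradient of 0.5 * mean((w*x - y)^2) with respect to w."""
    return sum((w * x - y) * x for x, y in batch) / len(batch)

def accumulated_grad(w, batch, micro_batch_size):
    """Process the batch one micro-batch at a time, accumulating the gradient."""
    assert len(batch) % micro_batch_size == 0, "sketch assumes equal micro-batches"
    num_micro = len(batch) // micro_batch_size
    total = 0.0
    for i in range(num_micro):
        micro = batch[i * micro_batch_size:(i + 1) * micro_batch_size]
        total += grad_mse(w, micro) / num_micro  # scale so the sum is a mean
    return total

def sgd_step(w, grad, lr=0.01):
    """One plain SGD update, applied once per accumulated gradient."""
    return w - lr * grad

batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
w = 0.5
grad = accumulated_grad(w, batch, micro_batch_size=1)
w_new = sgd_step(w, grad)
```

The accumulated gradient equals the full-batch gradient, so the effective batch size stays the same while peak activation memory scales with the micro-batch.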

🔄 Keeping things in Sync

Computation stalls during communication !!

Keeping the communication to computation ratio small is important for effective scaling.
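A back-of-the-envelope way to reason about this ratio is sketched below. It assumes a bandwidth-only cost model for a ring all-reduce, in which each rank transfers about `2 * (N - 1) / N` times the gradient size, and it ignores latency and any overlap of communication with computation. All numbers in the example (parameter count, bandwidth, compute time) are hypothetical.

```python
# Bandwidth-only estimate of the communication-to-computation ratio.
# Assumption: ring all-reduce, no latency term, no compute/communication overlap.

def ring_allreduce_seconds(num_bytes, num_ranks, bandwidth_bytes_per_s):
    """Approximate time to all-reduce `num_bytes` across `num_ranks` ranks."""
    if num_ranks == 1:
        return 0.0
    return 2 * (num_ranks - 1) / num_ranks * num_bytes / bandwidth_bytes_per_s

def comm_to_compute_ratio(num_params, bytes_per_grad, num_ranks,
                          bandwidth_bytes_per_s, compute_seconds):
    """Ratio of gradient all-reduce time to per-step compute time."""
    t_comm = ring_allreduce_seconds(
        num_params * bytes_per_grad, num_ranks, bandwidth_bytes_per_s
    )
    return t_comm / compute_seconds

# Hypothetical setup: 1B parameters, 2-byte gradients, 8 ranks,
# 25 GB/s per-rank bandwidth, 1 second of compute per step.
ratio = comm_to_compute_ratio(
    num_params=1e9, bytes_per_grad=2, num_ranks=8,
    bandwidth_bytes_per_s=25e9, compute_seconds=1.0,
)
```

Under these assumptions the ratio can be lowered by doing more computation per step (larger per-rank batches) or by sending fewer bytes (for example, lower-precision gradients).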

📝 Plan of Attack

🚀 Going Beyond Data Parallelism

- ✅ Useful when model fits on single GPU:

- ultimately limited by GPU memory

- model performance limited by size

- ⚠️ When model does not fit on a single GPU:

- Offloading (can only get you so far…):

- Otherwise, resort to model parallelism strategies

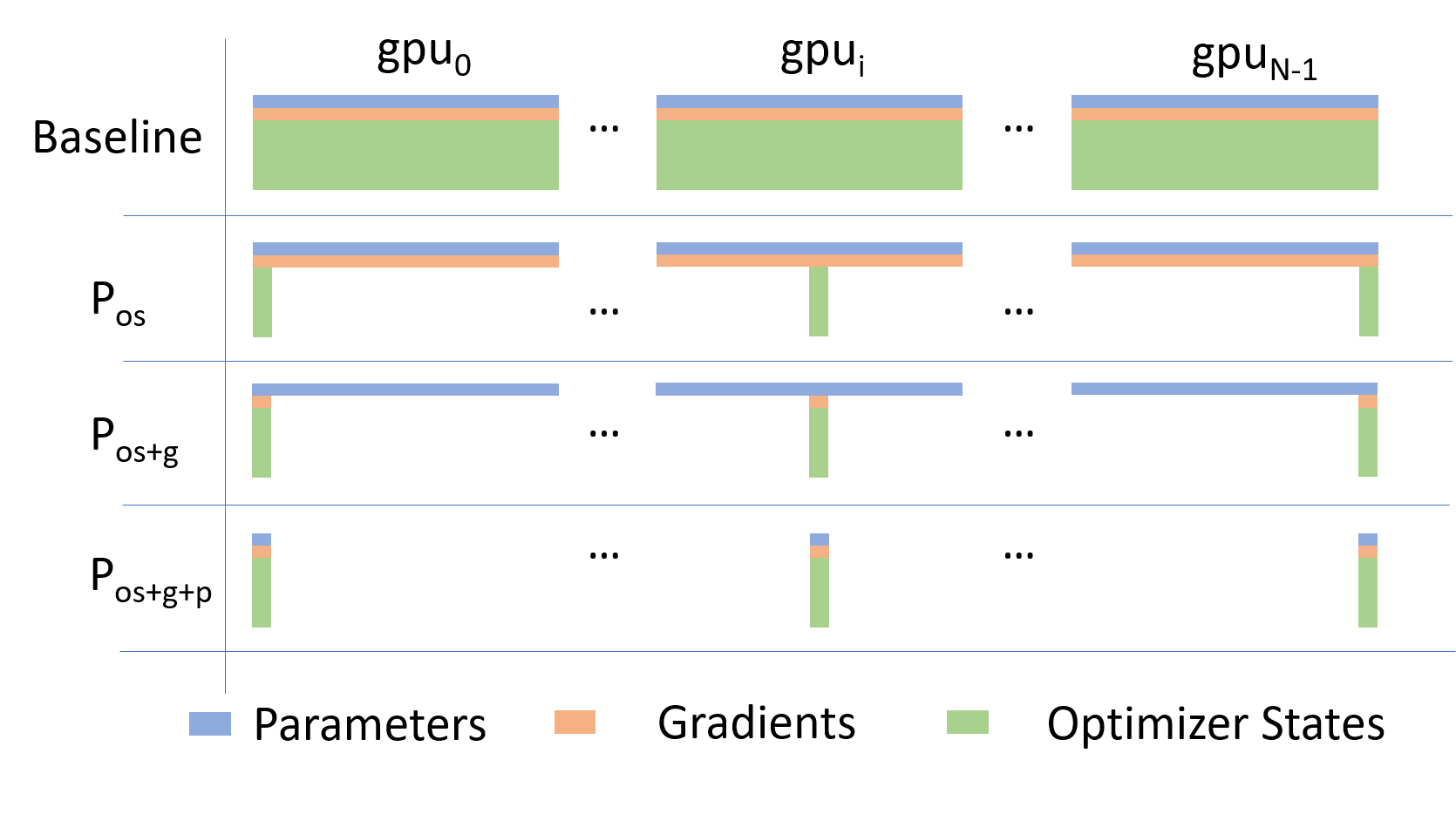

Going beyond Data Parallelism: ZeRO

- Depending on the ZeRO stage (1, 2, 3), we can partition (shard) across workers:

- Stage 1: optimizer states ($P_{os}$)

- Stage 2: gradients + opt. states ($P_{os+g}$)

- Stage 3: model params + grads + opt. states ($P_{os+g+p}$)
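The effect of each stage on per-GPU memory can be estimated with a short calculation. The sketch below assumes mixed-precision training with Adam, using the accounting from the ZeRO paper: 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for optimizer states (fp32 weights, momentum, and variance). Activations and temporary buffers are not included, and the model size and GPU count are hypothetical.

```python
# Per-GPU memory for model states under ZeRO, in bytes.
# Assumption: mixed-precision Adam (2 + 2 + 12 bytes per parameter).

def zero_bytes_per_gpu(num_params, num_gpus, stage):
    """Estimate model-state memory per GPU for ZeRO stage 0 (none) to 3."""
    params = 2.0 * num_params   # fp16 parameters
    grads = 2.0 * num_params    # fp16 gradients
    opt = 12.0 * num_params     # fp32 params + Adam momentum + variance
    if stage >= 1:
        opt /= num_gpus         # stage 1: shard optimizer states
    if stage >= 2:
        grads /= num_gpus       # stage 2: also shard gradients
    if stage >= 3:
        params /= num_gpus      # stage 3: also shard parameters
    return params + grads + opt

# Hypothetical example: 7.5B parameters on 64 GPUs.
gb_per_stage = {
    stage: zero_bytes_per_gpu(7.5e9, 64, stage) / 1e9 for stage in range(4)
}
```

For this example the estimate drops from 120 GB per GPU without ZeRO to under 2 GB per GPU at stage 3.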

🕸️ Additional Parallelism Strategies

- Tensor (/ Model) Parallelism (TP)

- Pipeline Parallelism (PP)

- Sequence Parallelism (SP)

- Supports 4D Parallelism (DP+TP+PP+SP)

Pipeline Parallelism (PP)

- Model is split up vertically (layer-level) across multiple GPUs

- Each GPU:

- has a portion of the full model

- processes in parallel different stages of the pipeline (on a small chunk of the batch)

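To see how the stages overlap on small chunks of the batch, here is a minimal sketch of a forward-only pipeline schedule, assuming each stage takes one time step per micro-batch (a simplified GPipe-style schedule). The function names are hypothetical. The idle fraction, often called the pipeline bubble, works out to `(p - 1) / (m + p - 1)` for `p` stages and `m` micro-batches.

```python
# Forward-only pipeline schedule, one time step per (stage, micro-batch).
# Assumption: all stages take equal time; backward passes are not modeled.

def pipeline_schedule(num_stages, num_microbatches):
    """schedule[t][s] = micro-batch processed by stage s at step t, or None if idle."""
    steps = num_stages + num_microbatches - 1
    return [
        [t - s if 0 <= t - s < num_microbatches else None
         for s in range(num_stages)]
        for t in range(steps)
    ]

def bubble_fraction(num_stages, num_microbatches):
    """Fraction of (stage, step) slots in which a stage sits idle."""
    return (num_stages - 1) / (num_stages + num_microbatches - 1)

schedule = pipeline_schedule(num_stages=4, num_microbatches=8)
idle_slots = sum(cell is None for row in schedule for cell in row)
bubble = bubble_fraction(num_stages=4, num_microbatches=8)
```

Increasing the number of micro-batches relative to the number of stages shrinks the bubble, at the cost of smaller chunks per step.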

Tensor Parallel (TP)

- Each tensor is split up into multiple chunks

- Each shard of the tensor resides on its designated GPU

- During processing each shard gets processed separately (and in parallel) on different GPUs

- synced at the end of the step

- See: 🤗 Model Parallelism for additional details

Tensor Parallel (TP)

- Suitable when the model is too large to fit onto a single device (CPU / GPU)

- Typically more complicated to implement than data parallel training

- This is what one may call horizontal parallelism

- Communication is required whenever data flows between two subsets of the model

- argonne-lcf/Megatron-DeepSpeed

- 🤗 huggingface/nanotron

Tensor (/ Model) Parallel Training: Example

Want to compute: $y = \sum_i x_i W_i = x_0 \ast W_0 + x_1 \ast W_1 + x_2 \ast W_2$

where each GPU has only its portion of the full weights as shown below

- Compute: $y_0 = x_0 \ast W_0$ → GPU1

- Compute: $y_1 = y_0 + x_1 \ast W_1$ → GPU2

- Compute: $y = y_1 + x_2 \ast W_2 = \sum_i x_i W_i$ ✅
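The same computation can be sketched in plain Python, treating each $x_i$ as a slice of the input vector and each $W_i$ as the matching block of rows of the weight matrix. The three "GPUs" are simulated as list slices, and the input values and helper names are made up for illustration.

```python
# Simulated tensor-parallel matmul: y = sum_i x_i W_i over 3 weight shards.

def vecmat(x, W):
    """Row vector x (length k) times matrix W (k x n) -> list of length n."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]

def add(a, b):
    """Elementwise sum of two equal-length vectors."""
    return [ai + bi for ai, bi in zip(a, b)]

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0], [0.0, 2.0], [1.0, -1.0]]

num_gpus = 3
rows = len(x) // num_gpus
# Each simulated GPU holds only its slice of x and its block of rows of W.
x_shards = [x[g * rows:(g + 1) * rows] for g in range(num_gpus)]
W_shards = [W[g * rows:(g + 1) * rows] for g in range(num_gpus)]

# Running sum: y_0 = x_0 W_0, then y_1 = y_0 + x_1 W_1, then y = y_1 + x_2 W_2.
y = [0.0, 0.0]
for x_i, W_i in zip(x_shards, W_shards):
    y = add(y, vecmat(x_i, W_i))

y_reference = vecmat(x, W)  # unsharded result for comparison
```

The partial products can also be computed independently on each device and combined with a single sum across devices, instead of being passed along one device at a time.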

🔭 AI-for-Science

ChatGPT: explain this image

🏗️ Aurora

| Property | Value |
|---|---|
| Racks | 166 |
| Nodes | 10,624 |
| XPUs | 127,488 |
| CPUs | 21,248 |
| NICs | 84,992 |
| HBM | 8 PB |
| DDR5 | 10 PB |
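The totals in this table are consistent with a fixed per-node configuration, which the short check below reproduces. The 12 XPUs per node figure appears elsewhere in these slides; the 2 CPUs and 8 NICs per node, and the 64 nodes per rack, are inferred here by dividing the table totals and should be read as assumptions.

```python
# Sanity check of the Aurora totals from per-node counts.
# 12 XPUs/node is quoted in the slides; CPUs/NICs per node are inferred from the table.

nodes = 10_624
racks = 166
per_node = {"XPUs": 12, "CPUs": 2, "NICs": 8}

totals = {name: nodes * count for name, count in per_node.items()}
nodes_per_rack = nodes // racks
```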

🌌 AuroraGPT (2024–)

AuroraGPT: General-purpose scientific LLM, broadly trained on a general corpus plus scientific {papers, texts, data}

- Explore pathways towards a “Scientific Assistant” model

- Build with international partners (RIKEN, BSC, others)

- Multilingual: English, 日本語 (Japanese), French, German, Spanish

- Multimodal: images, tables, equations, proofs, time series, graphs, fields, sequences, etc

Awesome-LLM

🧪 AuroraGPT: Open Science Foundation Model

🧰 AuroraGPT: Toolbox

- Datasets and data pipelines (how do we deal with scientific data?)

- Software infrastructure and workflows (scalable, robust, extensible)

- Evaluation of state-of-the-art LLMs (how do they perform on scientific tasks?)

🚂 Training

argonne-lcf/Megatron-DeepSpeed

Large Model Training: Any Scale, Any Accelerator

🏃♂️ Running

argonne-lcf/inference-endpoints

Inference endpoints for LLMs, hosted @ ALCF

🏋️ Challenges: In Practice

This is incredibly difficult in practice, due in part to:

- Brand new {hardware, architecture, software}

- Lack of native support in existing frameworks (though getting better!)

- General system stability

- 10k+ nodes (× 12 XPUs per node) ⇒ 100k+ XPUs

- network performance

- file system stability (impacted by other users !)

- many unexpected difficulties occur at increasingly large scales

- Combinatorial explosion of possible configurations and experiments

- {hyperparameters, architectures, tokenizers, learning rates, …}

💾 AuroraGPT: Training

- Training a fixed model on trillions of tokens requires:

- Aggregating data from multiple different corpora (e.g. ArXiv, Reddit, StackExchange, GitHub, Wikipedia, etc.)

- Sampling each training batch according to a fixed distribution across corpora

- Building indices that map batches of tokens into these files (indexing)

The original implementation was slow:

- Designed to run serially on a single device

- Major bottleneck when debugging data pipeline at scale
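The sampling and indexing steps listed above can be illustrated with a small serial toy. This is not the AuroraGPT data pipeline: the corpus names, weights, and the `build_blend_index` helper are hypothetical, and the index here simply records which corpus each sample comes from and its position within that corpus.

```python
# Toy blended-dataset index: draw each sample's corpus from a fixed distribution.
# Hypothetical weights and helper name; a serial sketch, not a scalable implementation.
import random
from collections import Counter

def build_blend_index(weights, num_samples, seed=0):
    """Return a list of (corpus_name, sample_id_within_corpus) pairs."""
    rng = random.Random(seed)            # fixed seed so the index is reproducible
    names = list(weights)
    probs = [weights[name] for name in names]
    next_id = Counter()                  # next unused sample id per corpus
    index = []
    for corpus in rng.choices(names, weights=probs, k=num_samples):
        index.append((corpus, next_id[corpus]))
        next_id[corpus] += 1
    return index

weights = {"arxiv": 0.5, "github": 0.3, "wikipedia": 0.2}
index = build_blend_index(weights, num_samples=10_000)
counts = Counter(corpus for corpus, _ in index)
```

Because the index depends only on the weights, the sample count, and the seed, it can be built once and reused across runs.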


🍹 AuroraGPT: Blending Data, Efficiently

📉 Loss Curve: Training AuroraGPT-7B on 2T Tokens

✨ Features

- 🕸️ Parallelism:

- {data, tensor, pipeline, sequence, …}

- ♻️ Checkpoint Converters:

- Megatron ⇄ 🤗 HF ⇄ ZeRO ⇄ Universal

- 🔀 DeepSpeed Integration:

- ZeRO Offloading

- Activation checkpointing

- AutoTP (WIP)

- ability to leverage features from DeepSpeed community

✨ Features (even more!)

- 🧗 Optimizers:

- Support for many different optimizers:

- Distributed Shampoo, Muon, Adopt, Sophia, Lamb, GaLORE, ScheduleFree, …

- See full list

- Large batch training


- 📊 Experiment Tracking:

- Automatic experiment and metric tracking with Weights & Biases

🧬 MProt-DPO

- Finalist: SC’24 ACM Gordon Bell Prize

- One of the first protein design toolkits that integrates:

- Text, (protein/gene) sequence, structure/conformational sampling modalities to build aligned representations for protein sequence-function mapping

🧬 Scaling Results (2024)

~ 4 EFLOPS @ Aurora

38,400 XPUs

= 3200 [node] x 12 [XPU / node]

🧬 MProt-DPO: Scaling Results

🚂 Loooooooooong Sequence Lengths

- Working with Microsoft/DeepSpeed team to enable longer sequence lengths (context windows) for LLMs

- See my blog post for additional details

SEQ_LEN for both 25B and 33B models (See: Song et al. (2023))

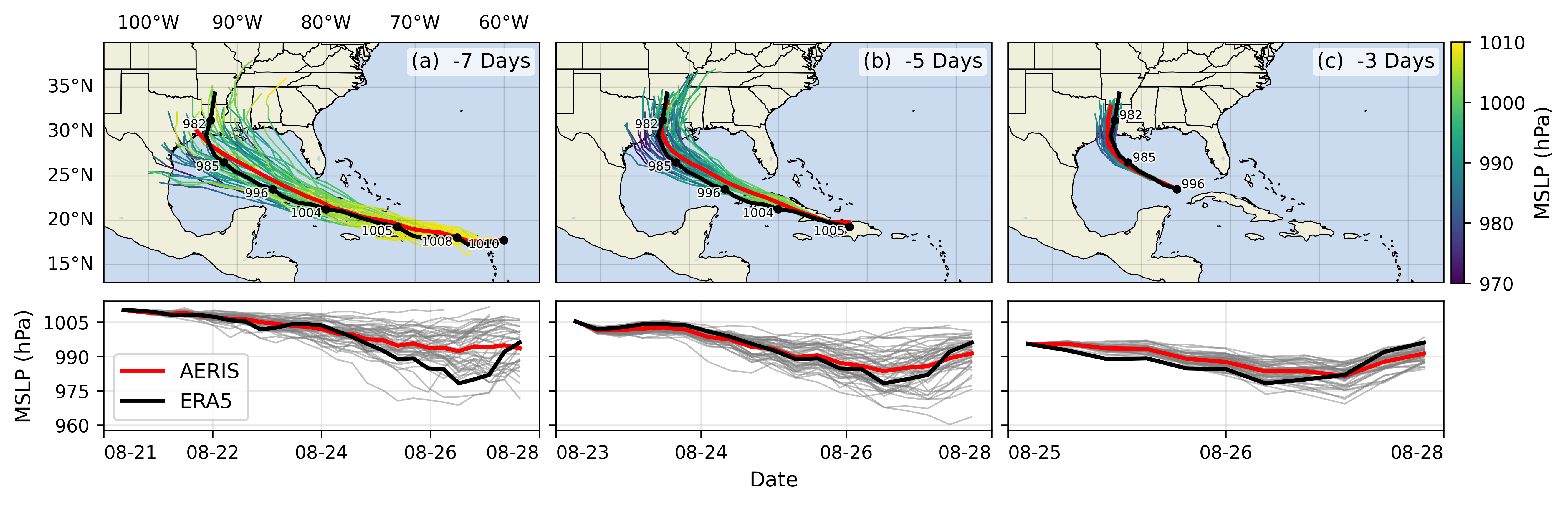

🌎 AERIS (2025)

👀 High-Level Overview of AERIS

➕ Contributions

☔ AERIS

First billion-parameter diffusion model for weather + climate

- Operates at the pixel level (1 × 1 patch size), guided by physical priors

- Medium-range forecast skill:

- Surpasses IFS ENS, competitive with GenCast

- Uniquely stable on seasonal scales to 90 days

🌀 SWiPe

A novel 3D (sequence-window-pipeline) parallelism strategy for training transformers across high-resolution inputs

- Enables scalable small-batch training on large supercomputers

- 10.21 ExaFLOPS

- @ 121,000 Intel XPUs (Aurora)

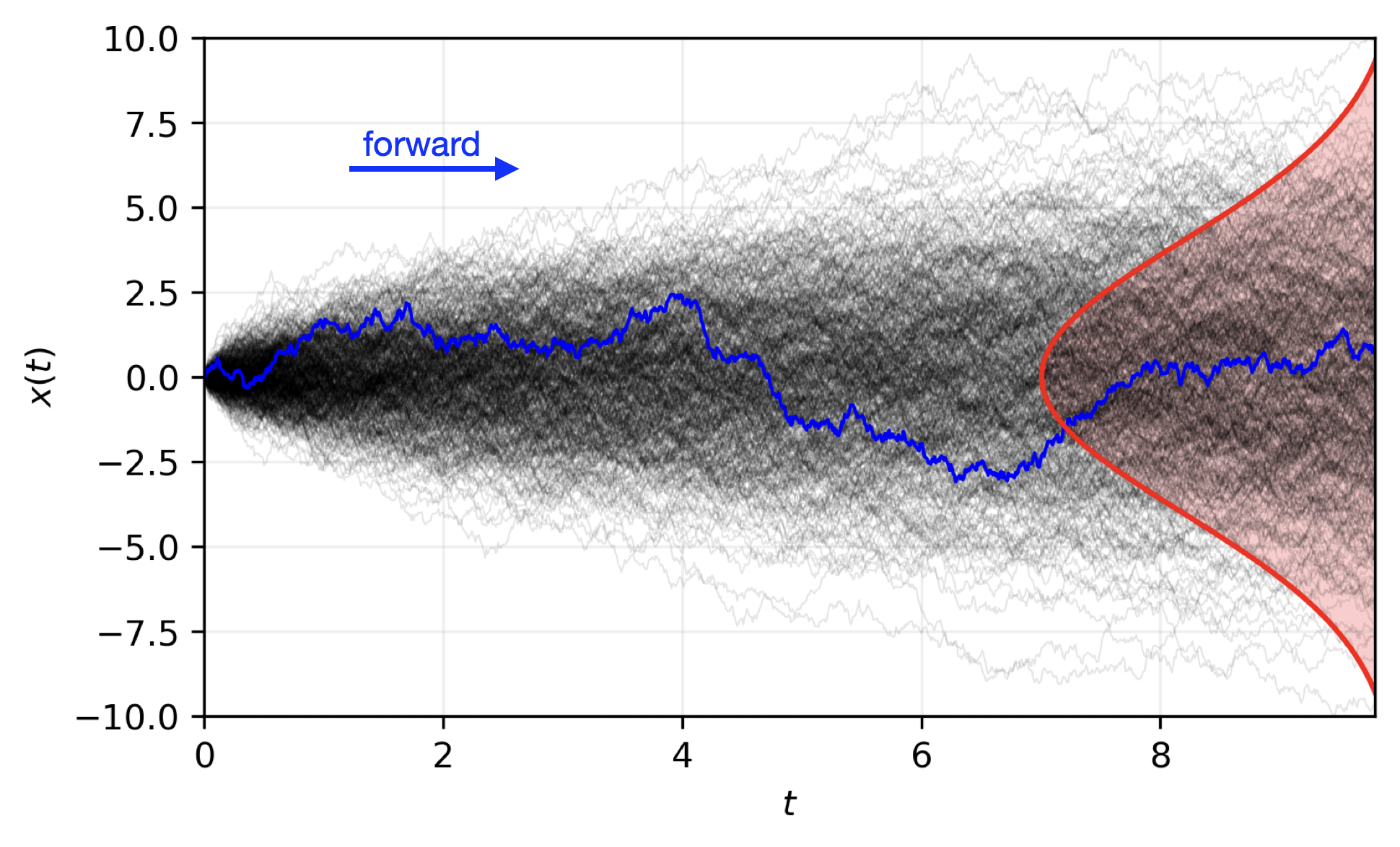

⚠️ Issues with the Deterministic Approach

Transformers:

- Deterministic

- Single input → single forecast

Diffusion:

- Probabilistic

- Single input → ensemble of forecasts

- Captures uncertainty and variability in weather predictions

- Enables ensemble forecasting for better risk assessment
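The difference between a single forecast and an ensemble can be shown with a deliberately simple toy. The code below is not a diffusion model and has nothing to do with the AERIS architecture: the "dynamics" are a made-up linear map, and Gaussian noise stands in for the stochastic sampling that produces different ensemble members from the same input.

```python
# Toy contrast: one deterministic forecast vs. an ensemble of sampled forecasts.
# Hypothetical dynamics and noise model; not a diffusion model.
import random
import statistics

def deterministic_forecast(x):
    """Single input -> single forecast."""
    return 0.9 * x + 1.0

def probabilistic_forecast(x, rng, noise_std=0.5):
    """Single input -> one random draw from a forecast distribution."""
    return deterministic_forecast(x) + rng.gauss(0.0, noise_std)

def ensemble(x, num_members, seed=0):
    """Sample several forecasts from the same input."""
    rng = random.Random(seed)
    return [probabilistic_forecast(x, rng) for _ in range(num_members)]

members = ensemble(x=10.0, num_members=50)
ensemble_mean = statistics.fmean(members)
ensemble_spread = statistics.stdev(members)  # a simple measure of uncertainty
```

The spread across members is the extra information an ensemble provides; a deterministic model returns only the single value.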

🎲 Transitioning to a Probabilistic Model

🌀 Sequence-Window-Pipeline Parallelism SWiPe

SWiPe is a novel parallelism strategy for Swin-based Transformers

- Hybrid 3D Parallelism strategy, combining:

- Sequence parallelism (SP)

- Window parallelism (WP)

- Pipeline parallelism (PP)
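In any hybrid 3D scheme, each global rank has to be assigned a coordinate along each parallel dimension. The sketch below shows one generic way to do that for an `SP × WP × PP` grid. The ordering (SP varying fastest, PP slowest), the group sizes, and the function names are assumptions for illustration and are not taken from the SWiPe implementation.

```python
# Generic mapping between a global rank and (pp, wp, sp) grid coordinates.
# Assumed ordering: SP varies fastest, then WP, then PP.

def rank_to_coords(rank, sp, wp, pp):
    """Return (pp_idx, wp_idx, sp_idx) for a global rank in an sp*wp*pp grid."""
    assert 0 <= rank < sp * wp * pp, "rank outside the grid"
    sp_idx = rank % sp
    wp_idx = (rank // sp) % wp
    pp_idx = rank // (sp * wp)
    return pp_idx, wp_idx, sp_idx

def coords_to_rank(pp_idx, wp_idx, sp_idx, sp, wp):
    """Inverse of rank_to_coords."""
    return (pp_idx * wp + wp_idx) * sp + sp_idx

# Hypothetical group sizes: 2-way SP, 3-way WP, 4-way PP -> 24 ranks.
SP, WP, PP = 2, 3, 4
coords = [rank_to_coords(r, SP, WP, PP) for r in range(SP * WP * PP)]
```

Ranks that share all but one coordinate form the communication group for that dimension, for example all ranks with the same `(pp_idx, wp_idx)` form one SP group.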

🚀 AERIS: Scaling Results

- 10 EFLOPs (sustained) @ 120,960 GPUs

- See (Hatanpää et al. (2025)) for additional details

- arXiv:2509.13523

🌪️ Hurricane Laura

📓 References

❤️ Acknowledgements

This research used resources of the Argonne Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC02-06CH11357.